GISiL

Gesture Interpreter for Sign Language

The aim of the project is to bridge the gap between users of conventional language and sign language and enable effortless communication between them. Our gloves use cost-effective miniature potentiometers and 3D-printed parts to detect finger movements. The gloves are paired with a smartphone, which translates signs using machine learning and natural language processing to produce audio and visual output.
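As a rough illustration of how potentiometer readings could be turned into finger-movement features, the sketch below rescales raw analog-to-digital converter (ADC) values into a per-finger flexion value between 0 and 1. Everything specific here is an assumption made for illustration and not taken from the project: the function name `normalize_flexion`, the 10-bit ADC range, the five-sensor layout, and the calibration numbers are all hypothetical.

```python
from typing import List, Sequence


def normalize_flexion(
    raw: Sequence[int],
    open_cal: Sequence[int],
    fist_cal: Sequence[int],
) -> List[float]:
    """Rescale raw ADC readings to flexion values in [0, 1], one per finger.

    open_cal holds the reading for each finger with the hand open (flexion 0),
    fist_cal holds the reading with the hand closed (flexion 1).
    """
    flexion = []
    for value, lo, hi in zip(raw, open_cal, fist_cal):
        if hi == lo:
            # Degenerate calibration: no usable range, report no flexion.
            flexion.append(0.0)
            continue
        scaled = (value - lo) / (hi - lo)
        # Clamp so sensor noise outside the calibrated range stays in [0, 1].
        flexion.append(min(1.0, max(0.0, scaled)))
    return flexion


# Hypothetical calibration values for five fingers on a 10-bit ADC (0-1023).
OPEN_HAND = [200, 210, 190, 205, 220]
CLOSED_FIST = [800, 790, 810, 795, 780]

reading = [500, 210, 900, 205, 780]
print(normalize_flexion(reading, OPEN_HAND, CLOSED_FIST))
# prints [0.5, 0.0, 1.0, 0.0, 1.0]
```

Normalizing against a per-user calibration, as assumed here, keeps the features comparable across hand sizes and sensor tolerances before they reach any downstream model.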

- Runner-up at Sangam Hardware Hackathon 2021.

- Presented at MIDL 2022 (short paper).

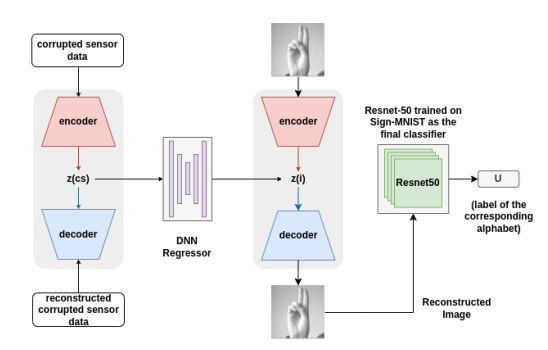

We use training data from publicly available images of American Sign Language (ASL) as the source domain, and run inference on noisy sensor data by bridging the domain gap through latent space transfer.

More information is available in our paper presentation.